Do you find your Red Hat Satellite infrastructure environment in desperate need of an upgrade and possibly out of support? If so, this guide is here to help you get back up to a supported version of the OS and the Satellite software.

This guide will show you how to upgrade your Satellite server from Red Hat Enterprise Linux 7 & Satellite 6.10, up to a supported configuration – Red Hat Enterprise Linux 8 & Satellite 6.13.

Most of us may have grown accustomed to seamless updates, real-time data synchronisation, and immediate access to online resources. However, there are many critical infrastructures, secure environments, and sensitive data centres that operate in ‘air-gapped’ or disconnected states for security and compliance reasons. In such settings, maintaining software and ensuring that systems are up-to-date with the latest patches becomes a unique challenge.

This guide aims to demystify the process, offering step-by-step instructions and best practices to keep your Red Hat Satellite current, even when it is isolated from the outside world. Whether you’re an IT administrator working in defence, finance, or any other high-security sector, this guide will help you navigate the nuances of updating Red Hat Satellite without a direct internet connection.

Step 1 – Preparation

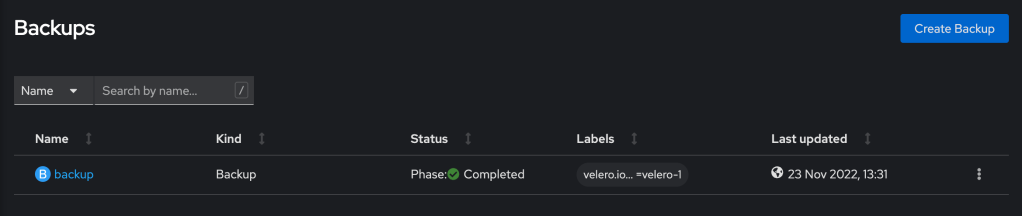

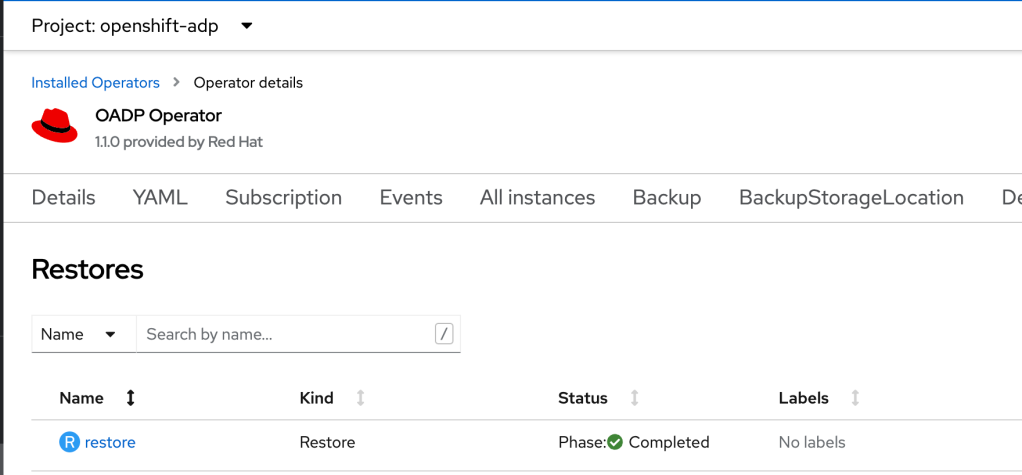

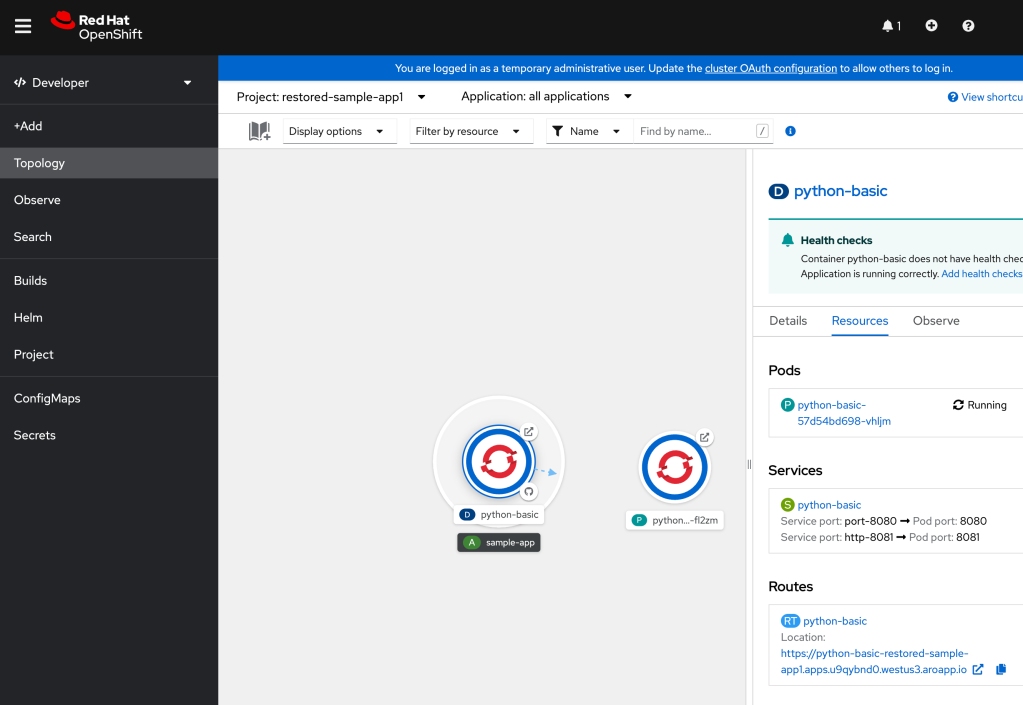

Backup / Snapshot:

Before doing anything, take a backup of the Satellite server. If it is a virtual machine, it is also worth taking a snapshot before making any changes, so that you can revert quickly if something goes wrong.

Understanding Dependencies / Pre-Requisites:

Ensure that the Satellite server is patched to the latest version of RHEL 7, and download the DVD ISOs for all of the required OS and software releases: RHEL 7, RHEL 8, Satellite 6.11 (RHEL 7 and RHEL 8), Satellite 6.12 (RHEL 8) and Satellite 6.13 (RHEL 8).

Assessing the current environment:

It is important to understand what ‘disconnected environment’ really means. For this example, the Satellite server has no access to the Red Hat CDN or any upstream / sync’d repositories. Content has to be synchronised and copied onto the server directly or placed on a host that the Satellite server can access via http. For this guide, the Satellite server has no access to any repositories at all, so we will be working on the assumption that everything has to be copied locally onto the server.

Ensure there is enough disk space on the host, especially on the / and /var partitions. The upgrade from RHEL 7 to RHEL 8 requires a change to the PostgreSQL data directory, so ensure that there is at least 20GB of free space on the partition holding /var/lib/pgsql.

Also allow an extra 10GB for hosting the latest RHEL 7 and RHEL 7 Extras repositories. In this example, they will be stored in /var/repos.
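The space requirements above can be checked before starting. This is a minimal sketch, assuming the 20GB and 10GB thresholds quoted in this guide; the function names (`nearest_existing_dir`, `has_free_gb`) are hypothetical helpers, not part of any Red Hat tooling. It walks up to the nearest existing parent directory so it still works when /var/repos has not been created yet.

```shell
#!/usr/bin/env bash
# Pre-flight disk space check. Thresholds are the ones quoted in this
# guide (20GB for PostgreSQL data, 10GB for the local RHEL 7 mirror);
# adjust them for your environment.

# Print the nearest existing directory at or above $1, so df works even
# if the target directory does not exist yet.
nearest_existing_dir() {
  local d="$1"
  while [ ! -d "$d" ]; do
    d=$(dirname "$d")
  done
  printf '%s\n' "$d"
}

# Return 0 if the filesystem holding $1 has at least $2 GiB available.
has_free_gb() {
  local dir avail_kb
  dir=$(nearest_existing_dir "$1")
  # -P forces one line per filesystem; -k reports sizes in 1K blocks.
  avail_kb=$(df -Pk "$dir" | awk 'NR==2 {print $4}')
  [ "$avail_kb" -ge $(( $2 * 1024 * 1024 )) ]
}

for spec in "/var/lib/pgsql:20" "/var/repos:10"; do
  dir=${spec%%:*}
  need=${spec##*:}
  if has_free_gb "$dir" "$need"; then
    echo "OK:   filesystem for $dir has at least ${need}GB free"
  else
    echo "WARN: filesystem for $dir has less than ${need}GB free"
  fi
done
```

The check only reports; it does not stop anything, so treat a WARN line as a prompt to extend the partition before continuing.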

Step 2 – Update the RHEL7 OS to the latest patches

Before upgrading, it is imperative that the RHEL 7 server is updated to the latest patches. The Leapp metadata checks which package versions are required and will upgrade them if needed. However, in this disconnected example the server has no access to the Red Hat Content Delivery Network (CDN) and does not subscribe to an upstream Satellite server, so the latest RHEL 7.9 content has to be imported manually before the updates can be applied.

To do this, you need a helper host that has connectivity to the Red Hat CDN, with the yum-utils package (which provides reposync) and the createrepo package installed. This host is used to create a local mirror of the RHEL 7 repositories, and the content is then transferred to the disconnected Satellite server.

On the connected host, ensure that it is registered with a valid subscription using subscription-manager, then enable the rhel-7-server-rpms and rhel-7-server-extras-rpms repositories:

# subscription-manager repos --enable=rhel-7-server-rpms --enable=rhel-7-server-extras-rpms

Create the local mirror repositories:

# mkdir -p /var/repos

# reposync --gpgcheck -l --repoid=rhel-7-server-rpms --download_path=/var/repos --downloadcomps --download-metadata

# reposync --gpgcheck -l --repoid=rhel-7-server-extras-rpms --download_path=/var/repos --downloadcomps --download-metadata

# createrepo -v /var/repos/rhel-7-server-rpms -g comps.xml

# createrepo -v /var/repos/rhel-7-server-extras-rpms -g comps.xml

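# tar -czvf rhel-7-repos.tar.gz -C /var/repos rhel-7-server-rpms rhel-7-server-extras-rpms

Copy the tar file to the disconnected Satellite server and extract it into the /media directory (create /media if required, ensuring there is enough space on the partition). Archiving relative to /var/repos means the repositories land directly in /media/rhel-7-server-rpms and /media/rhel-7-server-extras-rpms, which is where the repository file below points:

# mkdir -p /media && tar -xzvf rhel-7-repos.tar.gz -C /media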
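Because carrying media across an air gap is slow, it can pay to confirm the archive's layout on the connected host first. A minimal sketch, assuming the archive should unpack as `<repo-id>/repodata/repomd.xml` directly under /media (the layout the repository file expects); `archive_has_repo`, `check_archive` and the `ARCHIVE` variable are hypothetical names. An archive built from absolute paths (entries starting with `var/repos/`) fails this check.

```shell
#!/usr/bin/env bash
# Sanity-check a repository archive before it crosses the air gap.
# Assumes each repo should sit at the top level of the archive,
# optionally prefixed with "./", and contain createrepo metadata.

# Return 0 if the archive contains <repo>/repodata/repomd.xml at top level.
archive_has_repo() {
  local archive="$1" repo="$2"
  local pattern="^(\./)?${repo}/repodata/repomd\.xml\$"
  tar -tzf "$archive" | grep -Eq "$pattern"
}

# Check every repo id given after the archive path; report each one.
check_archive() {
  local archive="$1" repo rc=0
  shift
  for repo in "$@"; do
    if archive_has_repo "$archive" "$repo"; then
      echo "OK:      $repo"
    else
      echo "MISSING: $repo (wrong path prefix or no repodata?)"
      rc=1
    fi
  done
  return "$rc"
}

ARCHIVE=${ARCHIVE:-rhel-7-repos.tar.gz}
if [ -f "$ARCHIVE" ]; then
  check_archive "$ARCHIVE" rhel-7-server-rpms rhel-7-server-extras-rpms ||
    echo "Rebuild $ARCHIVE before copying it across." >&2
fi
```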

On the disconnected server, create the repository file in /etc/yum.repos.d:

# cat <<EOF > /etc/yum.repos.d/rhel7.repo
[rhel-7-server-rpms]
baseurl=file:///media/rhel-7-server-rpms
name=rhel-7-server-rpms
enabled=1
gpgcheck=0

[rhel-7-server-extras-rpms]
baseurl=file:///media/rhel-7-server-extras-rpms
name=rhel-7-server-extras-rpms
enabled=1
gpgcheck=0
EOF
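When several local repositories need the same boilerplate, generating the sections avoids typos in hand-edited heredocs. This is a sketch only: `write_local_repo` is a hypothetical helper that emits `file://` sections with GPG checking disabled, matching the choices made in this guide rather than a recommended security posture.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a yum .repo file for repositories unpacked
# under a local directory.
# Usage: write_local_repo OUTFILE MEDIA_ROOT REPO_ID...
write_local_repo() {
  local out="$1" root="$2" id
  shift 2
  : > "$out"   # start from an empty file
  for id in "$@"; do
    # One section per repo id: id, name, baseurl, enabled, gpgcheck,
    # followed by a blank separator line.
    printf '[%s]\nname=%s\nbaseurl=file://%s/%s\nenabled=1\ngpgcheck=0\n\n' \
      "$id" "$id" "$root" "$id" >> "$out"
  done
}
```

On the disconnected server, `write_local_repo /etc/yum.repos.d/rhel7.repo /media rhel-7-server-rpms rhel-7-server-extras-rpms` would produce sections equivalent to the rhel7.repo heredoc above.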

Update the host to the latest rhel7 packages:

# yum update -y

Step 3 – Download & Mount ISOs

Download the Red Hat Enterprise Linux 8.8 DVD ISO and the Satellite 6.11, 6.12 and 6.13 DVD ISOs from the Red Hat portal. At the time of writing, Satellite 6.11.5.4 is the latest version available for RHEL 7.

Download the 6.11.5.4 ISOs for both RHEL 7 and RHEL 8 and copy them onto the disconnected Satellite server, along with the RHEL 8.8 DVD ISO. This example keeps them in /var/isos.
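After copying multi-gigabyte ISOs across an air gap, it is worth verifying them before mounting. A minimal sketch, assuming you have noted the SHA-256 checksum shown for each ISO on the download page; `verify_iso` is a hypothetical helper built on the standard `sha256sum` tool.

```shell
#!/usr/bin/env bash
# Verify an ISO against the SHA-256 checksum recorded at download time.
# Usage: verify_iso PATH EXPECTED_SHA256
verify_iso() {
  local iso="$1" expected="$2" actual
  # sha256sum prints "<hash>  <file>"; keep only the hash.
  actual=$(sha256sum "$iso" | awk '{print $1}')
  # Compare case-insensitively in case the checksum was copied in upper case.
  if [ -n "$actual" ] && [ "${actual,,}" = "${expected,,}" ]; then
    echo "OK:       $iso"
  else
    echo "MISMATCH: $iso (got ${actual:-nothing})" >&2
    return 1
  fi
}
```

For example, `verify_iso /var/isos/rhel-8.8-x86_64-dvd.iso <checksum-from-portal>`, repeated for each Satellite ISO. A mismatch usually means a truncated copy, so re-copy the file rather than mounting it.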

Create the directories for all the media and mount the images to the respective directories:

# mkdir -p /media/{rhel-8,satellite-6.11-rhel8,satellite-6.11-rhel7}

# mount -o loop /var/isos/rhel-8.8-x86_64-dvd.iso /media/rhel-8

# mount -o loop /var/isos/Satellite-6.11.5.4-rhel-8-x86_64.dvd.iso /media/satellite-6.11-rhel8

# mount -o loop /var/isos/Satellite-6.11.5.4-rhel-7-x86_64.dvd.iso /media/satellite-6.11-rhel7

Once all of the ISOs are mounted in their respective directories, the next step is to create the repositories for the server to use.
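The mkdir and mount commands can also be wrapped in a small idempotent loop, which is handy if you need to remount after a reboot. This is a sketch assuming the ISO paths and mount points used in this guide and a root shell; `ensure_mounted` and the `MOUNTS` table are hypothetical names. It relies on `mountpoint` from util-linux to skip directories that are already mounted.

```shell
#!/usr/bin/env bash
# Idempotent version of the mkdir/mount commands above (run as root).

ensure_mounted() {
  local iso="$1" dir="$2"
  # Skip directories that already have a filesystem mounted on them.
  if mountpoint -q "$dir"; then
    echo "already mounted: $dir"
    return 0
  fi
  mkdir -p "$dir" || return 1
  # ISO images are read-only; "ro" avoids mount's write-protected warning.
  mount -o loop,ro "$iso" "$dir"
}

# ISO -> mount point pairs used in this guide.
declare -A MOUNTS=(
  ["/var/isos/rhel-8.8-x86_64-dvd.iso"]="/media/rhel-8"
  ["/var/isos/Satellite-6.11.5.4-rhel-8-x86_64.dvd.iso"]="/media/satellite-6.11-rhel8"
  ["/var/isos/Satellite-6.11.5.4-rhel-7-x86_64.dvd.iso"]="/media/satellite-6.11-rhel7"
)

for iso in "${!MOUNTS[@]}"; do
  if [ ! -f "$iso" ]; then
    echo "missing ISO, skipping: $iso" >&2
    continue
  fi
  ensure_mounted "$iso" "${MOUNTS[$iso]}"
done
```

Loop mounts made this way do not survive a reboot; rerunning the script afterwards is harmless because directories that are already mounted are skipped.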

Set up the RHEL7 Satellite 6.11 Repositories

Create the yum repo file, making sure each baseurl points to file:///media/<repo>:

# cat <<EOF > /etc/yum.repos.d/satellite-6.11-rhel7.repo
[satellite-6.11-rhel-7]
baseurl=file:///media/satellite-6.11-rhel7/Satellite
name=Satellite-6.11-rhel7
enabled=1
gpgcheck=0

[satellite-maintenance-6.11-rhel-7]
baseurl=file:///media/satellite-6.11-rhel7/Maintenance/
name=Satellite-Maintenance-6.11-rhel7
enabled=1
gpgcheck=0

[ansible-rhel-7]
baseurl=file:///media/satellite-6.11-rhel7/ansible/
name=Ansible-rhel7
enabled=1
gpgcheck=0

[rhel-7-server-rhscl-rpms]
baseurl=file:///media/satellite-6.11-rhel7/RHSCL/
name=rhel-7-software-collections
enabled=1
gpgcheck=0
EOF

Running yum repolist should now show all of the repositories needed to upgrade the Satellite server.
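A repository can appear in the list even when its baseurl points at the wrong directory inside an ISO, in which case it fails only when packages are fetched. A minimal sketch that catches this up front, assuming local repositories use `file://` baseurls and carry createrepo-style `repodata/repomd.xml` metadata; `check_local_baseurls` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Check that every file:// baseurl in the given .repo files points at a
# directory containing repodata/repomd.xml.
# Usage: check_local_baseurls FILE...
check_local_baseurls() {
  [ "$#" -gt 0 ] || return 1
  local rc=0 url path
  while IFS= read -r url; do
    path=${url#file://}
    if [ -f "$path/repodata/repomd.xml" ]; then
      echo "OK:      $url"
    else
      echo "MISSING: $url (no repodata/repomd.xml)"
      rc=1
    fi
  done < <(sed -n 's|^[[:space:]]*baseurl[[:space:]]*=[[:space:]]*\(file://[^[:space:]]*\).*|\1|p' "$@")
  return "$rc"
}

shopt -s nullglob
repo_files=(/etc/yum.repos.d/*.repo)
if [ "${#repo_files[@]}" -gt 0 ]; then
  check_local_baseurls "${repo_files[@]}" ||
    echo "Fix the MISSING paths before running the upgrade." >&2
fi
```

Non-`file://` baseurls are ignored, so the script can run against the whole /etc/yum.repos.d directory.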

Upgrade Satellite Server to 6.11

We are now in a position to upgrade the Satellite server to 6.11. Follow these instructions to upgrade the Satellite Server to 6.11; steps 12 to 16 have already been completed above. Every environment is different, so follow the instructions that apply to your environment. This guide only shows how to get your disconnected Satellite into a position where it can be upgraded.

Depending on how you got to Satellite 6.10 (an upgrade from 6.9 or a fresh install of 6.10), you may need to create the /var/lib/pulp/media/artifact directory:

# mkdir -p /var/lib/pulp/media/artifact

# chown -hR pulp:pulp /var/lib/pulp/media/artifact

Check which upgrade versions are available, then run the pre-upgrade checks:

# satellite-maintain upgrade list-versions

# satellite-maintain upgrade check --target-version 6.11 \
--whitelist="repositories-validate,repositories-setup"

You may have to whitelist additional checks, for example if you have any non-Red Hat packages installed. Once you are satisfied that all of the checks pass, take a backup / snapshot and then start the upgrade by running the following command:

# satellite-maintain upgrade run --target-version 6.11 \
--whitelist="repositories-validate,repositories-setup"

The upgrade should take approximately 45 minutes. You may be asked whether you want to clean up old tasks; agree to that. When it finishes, you will be running an up-to-date RHEL 7.9 with Satellite 6.11.
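The check, backup and run sequence can be wrapped so the upgrade only starts if the checks pass and someone confirms that a backup or snapshot exists. This is a sketch, not a replacement for the official procedure. `run_upgrade` and `WHITELIST` are hypothetical names, and the only satellite-maintain subcommands it calls are the ones shown above.

```shell
#!/usr/bin/env bash
# Run pre-upgrade checks and start the upgrade only if they pass and the
# operator confirms a backup/snapshot. Extend WHITELIST for your site.
WHITELIST="repositories-validate,repositories-setup"

run_upgrade() {
  local target="$1" answer
  satellite-maintain upgrade list-versions || return 1
  if ! satellite-maintain upgrade check --target-version "$target" \
      --whitelist="$WHITELIST"; then
    echo "Pre-upgrade checks failed for $target; not starting the upgrade." >&2
    return 1
  fi
  read -r -p "Checks passed. Backup/snapshot taken? Type yes to upgrade: " answer
  if [ "$answer" != "yes" ]; then
    echo "Upgrade to $target not started." >&2
    return 1
  fi
  satellite-maintain upgrade run --target-version "$target" \
    --whitelist="$WHITELIST"
}

# Only run when executed directly on a host that has satellite-maintain.
if [ "${BASH_SOURCE[0]}" = "$0" ] && command -v satellite-maintain >/dev/null; then
  run_upgrade "${1:-6.11}"
fi
```

Passing the target version as the first argument means the same script could be reused for later hops once the matching repositories are in place.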

The next step is to upgrade from Red Hat Enterprise Linux 7 to Red Hat Enterprise Linux 8.

This is described in Part 2.

I like sharing my knowledge and experiences in the hope that it helps others. Feel free to add comments or suggestions, and if you found this helpful and would like to shout me a coffee, that would be appreciated!